Cover Story

THEIR NEW WEAPON

THE LATEST SCOURGE: ELECTRONIC CRIMINALS

AI technology is letting a new breed of scammer impersonate celebs, law enforcement—or even you

When a finance worker in Hong Kong was called in to a live videoconference by the chief financial officer of his multinational company in February, everything seemed normal. The CFO and other executives acted and sounded as they always did, even if the reason for his being dragged in was unusual: He was instructed to wire $25.6 million to several bank accounts. He, of course, did as the boss asked.

Amazingly, the “CFO” image and voice were computer-generated, as were those of the other executives who appeared on the call. And the accounts belonged to scammers. The worker was the victim of a stunningly elaborate artificial intelligence scam, according to local media reports. The millions remain lost.

Welcome to the dark side of AI technology, in which the voices and faces of people you know can be impeccably faked as part of an effort to steal your money or identity.

Scientists have been programming computers to think and predict for decades, but only in recent years has the technology advanced to the point where a computer can effectively mimic human voices, movement and writing style and, more challenging, predict what a person might say or do next. In the past two years, the public release of tools such as OpenAI’s ChatGPT and DALL-E, Google’s Gemini (formerly Bard), Microsoft’s Copilot and other readily available generative AI programs has brought some of these capabilities to the masses.

AI tools have many legitimate uses, but criminals can also easily weaponize them to create realistic yet bogus voices, websites, videos and other content to perpetrate fraud. Many fear the worst is yet to come. We’re entering an “industrial revolution for fraud criminals,” says Kathy Stokes, AARP’s director of fraud prevention programs. AI “opens endless possibilities and, unfortunately, endless victims and losses.”

Criminals are already taking advantage of some of those “endless possibilities.”

▶︎ Celebrity scams. A “deepfake” (that is, a computer-generated fake version of a person) video circulated showing chef Gordon Ramsay apparently endorsing HexClad cookware. He wasn’t. Later, a similar deepfake featured Taylor Swift touting Le Creuset. The likenesses of Oprah Winfrey, Kelly Clarkson and other celebs have been replicated via AI to sell weight loss supplements.

▶︎ Fake romance. A Chicago man lost almost $60,000 in a cryptocurrency investment pitched to him by a romance scammer who communicated through what authorities believe was a deepfake video.

▶︎ Sextortion. The FBI warns that criminals take photos and videos from children’s and adults’ social media feeds and create explicit deepfakes with their images to extort money or sexual favors.

Eyal Benishti, CEO and founder of the cybersecurity firm Ironscales, says AI can shortcut the process of running virtually any scam. “The superpower of generative AI is that you can actually give it a goal; for example, tell it, ‘Go find me 10 different phishing email ideas on how I can lure person X.’ ”

Governments are scrambling to keep up with the fast-evolving technology. In late 2023, the White House issued an executive order calling for greater federal oversight of AI systems. It’s a technology, it noted, that “holds extraordinary potential for both promise and peril.” That led to the establishment of the U.S. AI Safety Institute within the U.S. Department of Commerce to “mitigate the risks that come with the development of this generation-defining technology,” as Commerce Secretary Gina Raimondo put it.

As it turns out, AI may be our best tool for countering the malicious use of AI.

Benishti’s company develops AI software that detects and prevents large-scale phishing attempts and ransomware attacks. AI is also a key tool for spotting other kinds of suspicious activity: for example, flagging unusual transactions at your bank or charges on your credit card, and blocking scam calls and texts.

The problem, says Craig Costigan, CEO of Nice Actimize, a software company that develops technology to detect and prevent financial fraud, is that “most of these scams and frauds are done by folks using the exact same tools as we use—but they don’t have to abide by the rules.”

AI is also used to combat robocalls, says Clayton LiaBraaten, senior strategic adviser at Truecaller, a caller ID and spam-blocking app. “If we see phone numbers generating hundreds of thousands of calls in a few short minutes, our models identify these patterns as suspicious. That gives us a very early indication that a bad actor is likely behind those calls.”
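Truecaller has not published how its models work, so the following is only a rough illustration of the kind of volume signal LiaBraaten describes: a minimal Python sketch that counts each number’s calls inside a sliding time window and flags any number that crosses a fixed threshold. The function name `flag_high_volume_callers`, the five-minute window and the 100,000-call threshold are hypothetical choices for illustration, not Truecaller’s actual settings; real systems rely on trained models rather than a single cutoff.

```python
from collections import defaultdict, deque

# Hypothetical defaults for illustration only; not Truecaller's real settings.
WINDOW_SECONDS = 300      # five-minute sliding window
CALL_THRESHOLD = 100_000  # calls per window treated as suspicious


def flag_high_volume_callers(calls, window_seconds=WINDOW_SECONDS,
                             threshold=CALL_THRESHOLD):
    """Return the set of numbers that placed at least `threshold` calls
    within some `window_seconds`-long span.

    `calls` is an iterable of (timestamp_in_seconds, phone_number) pairs,
    assumed to be sorted by timestamp.
    """
    recent = defaultdict(deque)  # phone number -> its timestamps still in the window
    flagged = set()
    for ts, number in calls:
        window = recent[number]
        window.append(ts)
        # Discard this number's calls that have aged out of the window.
        while window and window[0] <= ts - window_seconds:
            window.popleft()
        if len(window) >= threshold:
            flagged.add(number)
    return flagged


# Small-scale usage: one number dials every second for 50 seconds,
# another makes three calls several minutes apart.
robocaller = [(t, "555-0100") for t in range(50)]
ordinary = [(5, "555-0199"), (200, "555-0199"), (400, "555-0199")]
calls = sorted(robocaller + ordinary)
print(flag_high_volume_callers(calls, window_seconds=60, threshold=20))  # prints {'555-0100'}
```

A fixed threshold is the simplest possible version of this idea; it shows why sheer call volume in a short burst is such an early warning sign, since an ordinary caller rarely comes close.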

Banks use predictive AI as well. Costigan’s company, Nice Actimize, creates AI-based software that financial institutions use to sift through vast amounts of data to detect anomalies in individuals’ patterns, he explains. “It could be that someone is withdrawing $50,000, which is an unusual amount. It could be the location of the IP address. Why is the transaction happening in London?”
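Nice Actimize’s models are proprietary. As a simplified stand-in for the two signals Costigan mentions, an unusual amount and an unfamiliar location, here is a minimal Python sketch that compares a new transaction against one customer’s history. It uses a z-score (how many standard deviations an amount sits above the customer’s average) plus a check for never-before-seen locations. The `Transaction` class, the `is_suspicious` function and the cutoff of three standard deviations are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from statistics import mean, stdev

Z_LIMIT = 3.0  # hypothetical cutoff: flag amounts > 3 standard deviations above average


@dataclass
class Transaction:
    amount: float   # dollars
    location: str   # e.g., city inferred from the IP address (assumed available)


def is_suspicious(history, txn, z_limit=Z_LIMIT):
    """Return a list of reasons `txn` looks unusual for this customer.

    `history` holds the customer's past transactions; at least two are
    needed so that a standard deviation can be computed.
    """
    reasons = []
    amounts = [t.amount for t in history]
    avg, spread = mean(amounts), stdev(amounts)
    # z-score: how many standard deviations the new amount sits above the average.
    # If every past amount is identical (spread == 0), this sketch skips the check.
    if spread > 0 and (txn.amount - avg) / spread > z_limit:
        reasons.append("unusual amount")
    # Any location the customer has never transacted from is treated as new.
    if txn.location not in {t.location for t in history}:
        reasons.append("new location")
    return reasons


history = [Transaction(a, "Chicago") for a in (120, 80, 95, 150, 60, 110)]
print(is_suspicious(history, Transaction(50_000, "London")))  # ['unusual amount', 'new location']
print(is_suspicious(history, Transaction(100, "Chicago")))    # []
```

Returning reasons rather than a yes/no answer mirrors how such alerts are typically reviewed: a $50,000 withdrawal from London by a customer who usually spends about $100 in Chicago trips both checks at once.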

Possibly even more alarming, Costigan says, is voice cloning, given that the financial industry has long relied on verbal confirmation to authorize transactions. “Fraudsters can call up and say, ‘Hi, move this money for me.’ And that voice sounds exactly like you. That’s a problem today.”

Banks are considering going beyond voice confirmation, so “you may also get a single follow-up question, like what’s your favorite color,” Costigan says. “They may now even require something additional that validates that you are you.”

Consumers have a role in protecting themselves, Benishti says, by understanding that “they cannot 100 percent trust communication, especially unsolicited.” Fraud fighters, meanwhile, need to be ready to adjust their strategies, because scammers are “very astute technologists and accomplished psychologists” whose techniques keep evolving, LiaBraaten says. “It’s a cat-and-mouse game,” he adds. “We just have to stay ahead of them.”

Christina Ianzito covers fraud and other issues for aarp.org.

YOUR VICTORIES

Busted!

SCAM ARTISTS WERE ON THE ATTACK IN 2023—BUT SO WAS LAW ENFORCEMENT. HERE ARE SOME OF THE MOST SIGNIFICANT PROSECUTIONS AND FRAUD TAKEDOWNS OF THE YEAR

TRICKBOT SCAMMERS BEHIND BARS

In January, a 40-year-old Russian man was sentenced to more than five years in prison for his role in the TrickBot cybercrime gang, a group whose malware infected millions of computers in the U.S. and elsewhere, allowing the gang to pilfer banking passwords and other financial information. The gang used that information to steal tens of millions of dollars from unsuspecting individuals and businesses, and it deployed ransomware that disabled the computer systems of schools, hospitals and other organizations. Vladimir Dunaev was the second of nine charged gang members to plead guilty. The TrickBot operators shut down their servers in 2022.

‘KING OF CRYPTO’ FACES DECADES IN PRISON

In November, a New York jury found FTX cryptocurrency exchange founder Sam Bankman-Fried, 31, guilty of seven counts of felony fraud and conspiracy. The former billionaire was sentenced on March 28 to 25 years in prison and ordered to pay more than $11 billion. Bankman-Fried was charged in 2022, after the bankruptcy of FTX, which had been one of the largest cryptocurrency exchanges in the world. At the time of the bankruptcy, $8 billion in FTX customer money was missing. Bankman-Fried was found guilty of stealing billions of dollars from FTX customers and using the money to buy property, make political donations and repay lenders to Alameda Research, a crypto trading firm he also ran.

A RAID ON A DIGITAL CRIMINALS’ BANK

Criminals increasingly turn to the internet and cryptocurrencies to launder their profits. In March 2023, the FBI shut down ChipMixer, a cryptocurrency “mixer” that officials say had laundered more than $3 billion since 2017, much of it generated through fraud schemes. The site, which federal prosecutors say was run from Hanoi, Vietnam, by Minh Quoc Nguyen, 49, blended criminal proceeds with other deposits, diluting depositors’ funds until they were exceedingly difficult for investigators to trace. ChipMixer is offline, but Nguyen, who faces felony charges and is on the FBI’s Cyber’s Most Wanted list, remains a fugitive.

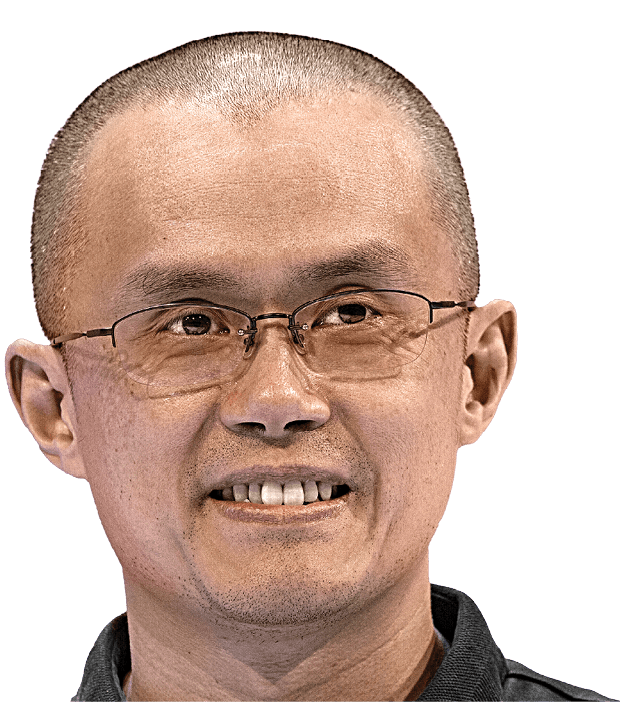

CRYPTO EXCHANGE HIT WITH BILLIONS IN FINES, AND CEO PLEADS GUILTY

In another high-profile cryptocurrency case, Binance and its CEO, Changpeng Zhao, 46, better known as CZ, pleaded guilty last November to violating the Bank Secrecy Act. Federal prosecutors charged Binance and Zhao with skirting U.S. banking regulations to attract fraudulent business. Authorities say the company facilitated billions of dollars in unregulated cryptocurrency transactions, providing criminals across the world a way to move stolen money and other proceeds into the banking system. Binance agreed to pay $4.3 billion in fines and restitution. Zhao faces as much as 10 years in prison.

AFFINITY SCAMMERS SHUT DOWN

So-called affinity scammers exploit shared traits such as language or religion to target members of their own community. A California man was sentenced to more than five years in federal prison in March 2023 for his part in a scam that stole $15 million from more than 30,000 Spanish speakers in the United States. Officials say Luis Rendon, 60, and others operating out of call centers in Peru called Spanish-speaking people and threatened them with arrest if they did not pay bogus “settlement fees” that the crooks claimed immigrants were required to pay.

Joe Eaton writes about health care and Medicare fraud for AARP. He was an investigative reporter for the Center for Public Integrity.

CREDITS, FROM TOP: PHOTO ILLUSTRATION: TYLER COMRIE (PHOTO: GETTY IMAGES); RAFAËL LAFARGUE/ABACA/SIPA USA VIA AP IMAGES; BEBETO MATTHEWS/AP PHOTO
