AARP Hearing Center


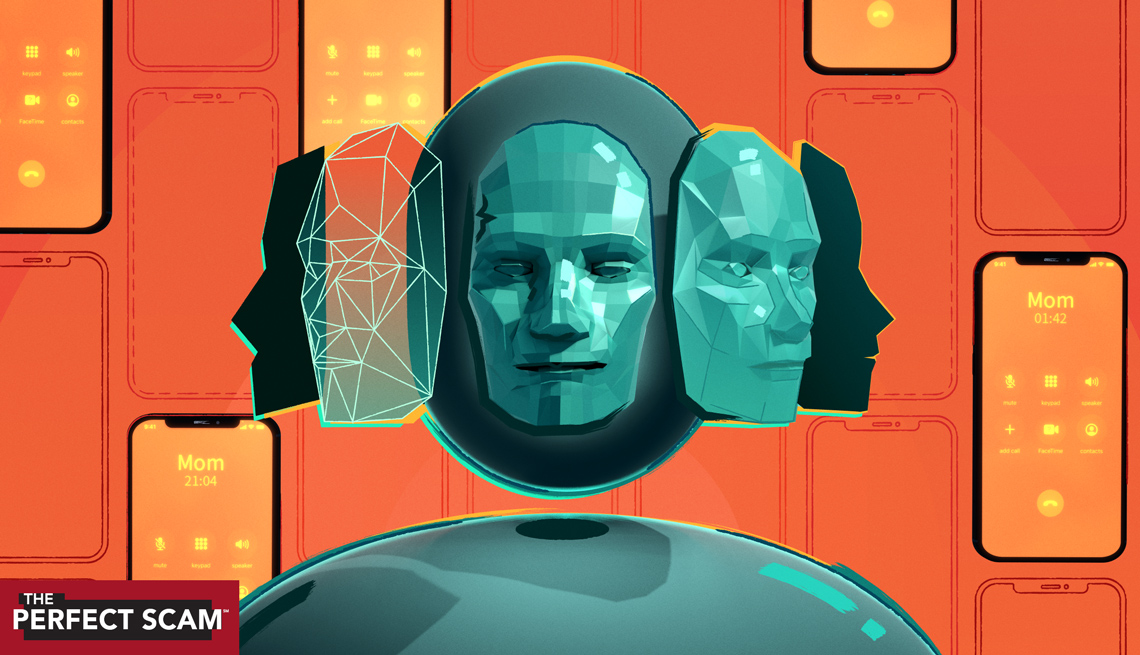

[00:00:00] Hi, I'm Bob Sullivan and this is The Perfect Scam. This week we'll be talking with people about a new form of the Grandparent Scam. One that plays on victims' heartstrings by using audio recordings of loved ones asking for help abroad. What makes this new is that the voices are actually AI generated, and they sound pretty convincing. In fact, you're listening to one right now. I'm not really Bob Sullivan. I'm an AI generated voice based on about 3 minutes’ worth of recordings of Bob on this program. It sounds pretty convincing though, doesn't it?

(MUSIC SEGUE)

[00:00:42] Bob: Welcome back to The Perfect Scam. I’m your real host, Bob Sullivan. Today we are bringing you a very special episode about a new development in the tech world that, well, frankly, threatens to make things much easier for criminals and much harder for you: artificial intelligence, AI. If you listen to some people, it's already being used to supercharge the common grandparent scam, or impostor scam, because it's relatively easy and cheap for criminals to copy anyone's voice and make them say whatever the criminals want. What you heard at the beginning of this podcast wasn't me. It was an audio file generated with my permission by Professor Jonathan Anderson, an expert in artificial intelligence and computer security at Memorial University in Canada. And as I said, well, as that voice said, it sounds pretty convincing. It also might sound kind of like a novelty or even a light-hearted joke until you hear what some victims are saying.

(MUSIC SEGUE)

[00:01:46] Jennifer DeStefano: At about 4:53 pm, I received a call from an unknown number upon exiting my car. At the final ring, I chose to answer it, as unknown calls, which we're very familiar with, can often be a hospital or a doctor. It was Brianna sobbing and crying saying, "Mom..."

[00:02:03] Bob: That's the real-life voice of Jennifer DeStefano from Phoenix, who testified before Congress earlier this year about her harrowing experience with a kidnapping scam involving her daughter.

[00:02:15] Jennifer DeStefano: "Mom, these bad men have me. Help me, help me, help me." She begged and pleaded as the phone was taken from her. A threatening and vulgar man took the call over. "Listen here, I have your daughter. You call anybody, you call the police, and I'll drive to Mexico and you'll never see your daughter again." I ran inside and started screaming for help. The next few minutes were every parent's worst nightmare. I was fortunate to have a couple of moms there who knew me well, and they instantly went into action. One mom ran outside and called 911. The kidnapper demanded a million dollars, and that was not possible. So then he decided on $50,000 in cash. That was when the first mom came back in and told me that 911 is very familiar with an AI scam where they can use someone's voice. But I didn't process that. It was my daughter's voice. It was her cries; it was her sobs. It was the way she spoke. I will never be able to shake that voice and the desperate cries for help out of my mind.

[00:03:11] Bob: Jennifer DeStefano ultimately tracked down her daughter, who was safe at home, so she didn't send the criminals any money, but she went through those moments of terror and still struggles with the incident, partly because the voice sounded so real. And she's hardly the only one who thinks voice cloning or some kind of technology is actively being used right now by criminals to manipulate victims. Last year, an FBI agent in Texas warned about this use of artificial intelligence by criminals. Then in March, the Federal Trade Commission issued a warning about it. So far, all we have are scattered anecdotes about the use of computer-generated voices in scams, though it's easy to see the potential for this problem. So I wanted to help us all get a better grasp on what's happening, and for this episode, we've spoken to a couple of experts who can help a lot with that. The first is Professor Jonathan Anderson, who you've already met, sort of. Remember, I asked him to make the fake me.

[00:04:09] Jonathan Anderson: I teach students about computer security, so I'm not really a fraud expert, though I certainly have some exposure to that kind of thing. I come at it from more of a technical angle, but I guess this all started when there were some local cases of the grandparent scam being run here, and they were looking for somebody who is a general cybersecurity expert to comment, and that's where things started for me talking to people about this issue.

[00:04:33] Bob: So can you sort of right-size the problem and describe it for me at the moment?

[00:04:39] Jonathan Anderson: Sure. I mean, scams are nothing new, of course. And this particular scam of putting pressure on people and saying, oh, your loved one is in a lot of distress, I mean, that's not a new thing, but what is new is that it's so cheap, easy, and convenient to clone someone's voice today that you can make these scams a lot more convincing. And so we have seen instances in Canada, across the country. There have been people who at least claim that they heard their grandson's voice, their granddaughter's voice, that kind of thing, on the phone. And based on the technology that is easily available to anyone with a credit card, I could very much believe that that's something that's actually going on.

[00:05:22] Bob: But wait. I mean I'm sure we've all seen movies where someone's voice is cloned by some superspy. It's easy enough to imagine doing that in some sci-fi world, but now it's easy and cheap to do it. Like how cheap?

[00:05:37] Jonathan Anderson: So with some services, you can pay $5 a month for a budget of however many words you get to generate and up to so many cloned voices. A particular service that I was looking at very recently, and in fact had some fun cloning your voice with, was $5 a month. Your first month is 80% off, and when you upload voice samples, they ask you to tick a box that says, "I promise not to use this cloned voice to conduct any fraudulent activities." And then you can have it generate whatever words you want.

[00:06:11] Bob: Well, you have to click a box, so that takes care of that problem.

[00:06:14] Jonathan Anderson: Yeah, absolutely. So certainly, I don't think this prevents any fraud, but it probably prevents that website from being sued and held liable for fraud perhaps.

[00:06:24] Bob: So did you say $5 a month, 80% off, so it's a dollar?

[00:06:29] Jonathan Anderson: I did, yeah. One US dollar for 30 days of generating, I think 50,000 words in cloned, generated voices.

[00:06:38] Bob: 50,000 words.

[00:06:41] Jonathan Anderson: Mm-hmm, and up to 10 cloned voices that you can kind of have in your menu at any one time, and then if you want to spend a little bit more per month, there are much larger plans that you can go with.

[00:06:53] Bob: That's absolutely amazing.

[00:06:56] Bob: For one dollar you can make someone say anything you want in a voice that sounds realistic? Frankly, I was still skeptical that it was that easy, and definitely skeptical that it can be convincing enough to help criminals operate scams, so I asked Jonathan to simulate how that would work using my voice. And he said, "Easy." What you are about to hear is fake, let's be clear, but here's what a criminal could do with my voice for $1.

[00:07:26] (fake Bob) Steve, I need help. Oh my God! I hit a woman with my car. It was an accident, but I'm in jail and they won't let me leave unless I come up with $20,000 for bail and legal fees. I really need your help, Steve, or I'll have to stay here overnight. Can you help me? Please tell me you can send the money.

I've never felt this way about anybody before, Sandra. I wish we could be together sooner. As soon as I finish up the job on this oil rig, I will be on the first flight out of here. In the meantime, I've sent you something really special in the mail so you know how much I care about you.

I just need some money to help pay for the customs...

Now you've heard me say many times on this podcast to NEVER send gift cards as payment. Scammers will often pose as an official from a government agency and say you need to pay taxes or a fine, or they'll pretend to be tech support from Microsoft or Apple and ask for payment through gift cards to fix something that's wrong with your computer. But this time is an exception to that rule. And I am going to ask you to purchase gift cards so I can help you protect yourself from the next big scam.

[00:08:35] Bob: Okay, real Bob back here. Wow, that’s absolutely amazing. Okay, so I've been writing about security and privacy and scary things for a long time, and it's pretty common, right, that people make a lot out of things to get attention for themselves, so I'm always kind of on alert for that. And to be honest with you, I was like, okay, how good can these things be? I've listened to these clips. I'm alarmed (chuckles).

[00:08:59] Jonathan Anderson: Yep.

[00:09:00] Bob: I've read a lot about this. I knew this was going on. It was still disturbing to hear my own voice produced this way. Have you done it with yourself?

[00:09:06] Jonathan Anderson: I have. So I've generated voices for myself and a few different like media people that I've spoken with, and I played the one of me, and I thought it sounded quite a lot like me. My wife thought it sounded like a computer imitating me, so I guess that's good. Maybe the sample I uploaded wasn't high quality enough, or it might be that people use radio voice when they are media personalities and maybe that's slightly easier to imitate, I'm not 100% sure. But it, it does feel strange to listen to yourself, but not quite yourself.

[00:09:39] Bob: Yeah, did it make you want to shut the computer and go for a walk or anything?

[00:09:42] Jonathan Anderson: Hah, ah, well, doing computer security research makes me want to shut all the computers and go for a walk.

[00:09:48] Bob: (laughs)

[00:09:50] Bob: Okay, so I had to know, how do these services work? It turns out they do not work the way I imagined they do.

[00:09:59] Bob: My emotional response is that what these really are is like someone has somehow cut and pasted individual words, like an old ransom note made of words cut out of magazines and pasted onto a piece of paper, but that's not what it is, is it?

[00:10:13] Jonathan Anderson: No, so there are services that have pretrained models of what a human voice sounds like in a generic sense, and then that model gets kind of customized by uploading samples of a particular person's voice. So we all remember the text-to-speech engines that didn't sound very good, where it said, "You - are - opening - a - web - browser." Well, those have improved a lot, but now they can also be personalized to sound like a specific person by uploading a very, very small amount of recording of a voice. So I took about three minutes' worth of recordings of your voice, and of course, anyone who uses the internet would be able to get a lot more than three minutes of the voice of somebody who hosts a podcast or somebody who's a media personality or, to be honest, anybody who posts a Facebook video. And when you upload that information, you're able to personalize this model of an AI generator, and then you provide a text, and it does text-to-speech, except instead of sounding like a generic voice, instead of sounding like Siri, it sounds a lot like the person whose voice samples you uploaded. The more sample data you have, the more opportunity there is for that generated voice to sound like the real person, but you can imagine that if you took those generated samples and played them to somebody over a phone, which has kind of a noisy connection and really attenuates certain frequencies anyway, you could fool an awful lot of people, and you can make these things say whatever words you want. So you can say, you know, "Help me, Grandma, I've been arrested, and I've totaled the car, and here's my lawyer. Can you talk to my lawyer about providing some money so that he can get me out of jail?" And then another voice comes on the line and can answer questions interactively.

[00:11:58] Bob: You know, that's just amazing. I'm so glad you explained it like that. Again, I was picturing, maybe it's even the movie Sneakers, you know, where they have to get a person to say all those words, and then they can cut and paste them together. This has nothing to do with that. This is a voice with a full vocabulary that's just tweaked with little audio particulars sampled from people's other speaking, so that you can make the person say anything that you want them to say and sound like they would sound, right?

[00:12:26] Jonathan Anderson: Absolutely. So yeah, in the Sneakers quote that you referenced, "My voice is my passport. Verify me."

[00:12:33] Bob: That's it, yes, yeah.

[00:12:34] Jonathan Anderson: It sounded a little bit odd, right? But now the normal vocal mannerisms of a specific person, not just the specific tone in which they speak but also how much they vary their voice, things like that, can all be captured. There are some parameters that you tweak in order to make it sound as lifelike as possible, but if you have high-quality voice recordings, you can basically make that voice say anything. Those parameters, and how much data you upload, influence exactly how faithful it sounds to the original voice. But even with just a few minutes of sample, it can sound pretty darn close.

[00:13:12] Bob: So, as you can see, voice cloning technology has come a long way since it was imagined by sci-fi movies of the 1970s and '80s. But you don't have to look too far to see another example of technology that works this way.

[00:13:27] Jonathan Anderson: So I think people used to have this idea of faking images, and indeed, faking images used to be a matter of somebody who was really, really good at Photoshop going into the tool and dragging an image from one place into another and then having to fix up all the edges. It was kind of a skilled art form, almost. But now you can go to some online AI generation tools and say, give me this image, but where that person is surrounded by ocean, or they're surrounded by prison bars, or they're in a group shot with these other people from this other image. And it's kind of like that for voices as well now, where we can generate a voice saying whatever we want it to say, not having to just copy and paste little bits and pieces from different places.

[00:14:12] Bob: Let's face it. Hearing a cloned voice that comes so close to real life is downright spooky. In fact, that spookiness has a name.

[00:14:23] Jonathan Anderson: I guess it kind of goes to our fundamental assumptions about what people can do and what machines can do. There's the uncanny valley effect, but there's also something uncanny about hearing something you thought could only come from a human being that isn't coming from a human being. I think that's deeply disquieting in this sense.

[00:14:49] Bob: Hmm, I'm sorry, what was the uncanny valley effect? What was that?

[00:14:53] Jonathan Anderson: Oh, so the uncanny valley is this thing you see in, like, animation, where as animation gets more and more lifelike, people receive it in a very positive way, but there comes a point where something is so close to lifelike, but not quite, that people turn away from it with revulsion and disgust. It makes people feel really strange, and they really don't like it, 'cause it's almost human, but not quite. People have written lots about the uncanny valley, especially in CGI and that kind of thing. But I think the same thing applies to the technology we're talking about here: if you have a voice that sounds almost like you, but not quite, it sounds really strange in a way that a purely synthetic voice like Siri wouldn't.

[00:15:44] Bob: That's fascinating, and as you were describing it, it hit right close to home, so that makes a ton of sense. As did the two audio samples that you sent; they were disturbing and made the point as clearly as could be.

[00:16:01] Bob: Okay, spooky is one thing, unnerving is one thing. But should we be downright scared of the way voice cloning works and what criminals can do with it?

[00:16:13] Jonathan Anderson: In the short term, I think we should be kind of concerned. I think we've come to a place, after a long time, where when you see an image of something that's really, really implausible, your first thought is not, I can't believe that that event happened. Your first thought is probably, I wonder if somebody photoshopped that. And I think we are soon going to get to a similar place with video, with being able to generate images of people moving around and talking that seem pretty natural. And in fact, there are some services that, although they're not quite lifelike yet, are doing things like that. But definitely with voices, if you hear a voice saying something that you don't think could be plausible, or that seems a little off to you, you should definitely be thinking, I wonder if that's actually my loved one, or if that's somebody impersonating them using a computer.

[00:17:05] Bob: You know, for quite a while now, at least a couple of election cycles, I think, there's been concern about deep fake videos of political candidates being used. And so that's where my brain was, and I think that's probably where a lot of people's brains were: that sort of large-scale political, socioeconomic attack. But using this kind of tool to steal money from vulnerable victims on the internet seems, honestly, like a more realistic nefarious use of this technology.

[00:17:37] Jonathan Anderson: It does, absolutely. And those two things can be related: you could do mass, individually targeted things where you fake a video from a political candidate saying, "Won't you, uh, Bob Sullivan, please donate to my campaign," or something. I mean, maybe those two things can be married together, but certainly while people were looking at the big picture, sometimes we forget to look at the details, at individual people getting scammed out of thousands, or in some cases tens of thousands, of dollars. It might not be a macroeconomic issue, but it is a really big deal to a lot of people.

[00:18:10] Bob: When I went to school, media literacy was a big term. You know, and now it feels sort of like we need a 21st-century digital literacy, which kind of comes down to not believing anything you see or hear. And I guess that's a good thing. I have mixed feelings about that, though. Do you?

[00:18:29] Jonathan Anderson: Yeah, so, I mean, as a security researcher, or when I teach computer security to students, I will often tell them, now, if you come into this class wearing rose-colored glasses, I'm afraid to tell you, you won't be wearing them by the end, 'cause we are going to look at things that people can do, and attacks that people can run, and some pretty nefarious things that people can do on the internet using various technologies. But part of the goal there is that if we learn what is possible, then we learn what not to trust. And then if we know what not to trust, we can think in a more comprehensive and fully grounded way about what we should trust, why we trust certain things, and what the appropriate reasons are to trust certain things that we hear, see, et cetera.

[00:19:16] Bob: I do think that's good advice. Don't trust everything you read or everything you hear, or everything you see, but let's be honest, that is a very tall order, and frankly, I'm not sure how much I want to live in a world where nobody trusts anything.

[00:19:32] Jonathan Anderson: I mean, it's going to be a long time before human nature isn't trusting, because we are fundamentally social creatures, et cetera. Even with things like text, I mean, people have been writing false things down in printed form for hundreds of years. And yet people still believe all kinds of interesting things that they read on Facebook. So dealing with human nature is well outside of my expertise, and I’m afraid I'm not sure what to do with that, but for this specific kind of scam, there are things that we can do, which look a lot like traditional responses to scams. You know, the old adage of, "If it sounds too good to be true, it probably is." Well, if it sounds too bad to be true, then maybe we should check. So in this particular scam, for example, you will often have people creating a time-limited, high-pressure situation by calling someone and saying, "I'm in jail right now, and you need to talk to my lawyer, and you need to give them cash so that they can get me out of jail." Now, first of all, I don't know a lot of lawyers who send couriers to your door to collect bundles of cash, but there are things that you could do, such as: okay, well, where are you in jail? Let me call them back. Do you have a lawyer? Is this lawyer somebody I can find in a phone book, and can I call their office in order to arrange whatever needs to be arranged? It's kind of like the old advice about credit card fraud: don't tell the person who called you anything; call the number on the back of your card so that you know who you're talking to.
And some of those kind of simple techniques do allow people to take back control of the conversation, and they're not glitzy, exciting techniques, because they're not technologically based. But unfortunately, some of the technologically based things that people propose, like trying to identify certain glitches in AI-generated speech, aren't going to last, because whatever those glitches are, we're going to be able to smooth them out soon enough. So really, we need a more fundamental approach that goes back to the first principles of scams.

[00:21:37] Bob: So, what about the companies involved? Having a check box that says, "Fingers crossed, promise you won't use this for fraud," I don't know if that sounds like enough to me. Should we be demanding more of the tech companies that make this technology available?

[00:21:50] Jonathan Anderson: I want to say yes. (chuckles) But in the long run, I don't know that that's actually going to make a difference. If you prevent companies based in western democracies from producing this kind of technology, or if you prevent them from selling it to end users without, I don't know, making the end users register themselves with the local police or something, it's not going to stop the technology from being developed; it's just going to push it offshore. I think outlawing artificial voices would be a little bit like outlawing Photoshop. You might well be able to make Adobe stop shipping Photoshop, but you're not going to be able to prevent people around the world who have access to technology from making image manipulation software.

[00:22:35] Bob: There are some situations where companies have developed technologies and simply decided they weren't safe to sell to the general public. That happened at Google and Facebook with facial recognition, for example. What about that as a solution?

[00:22:50] Jonathan Anderson: Yeah, so when you have a large company deciding whether or not they're going to use certain technology with the data that their users have provided them, then that's a slightly different conversation about the responsibilities that they have, the ethics that they have to uphold, and the potential for regulation if they get that wrong, which of course, I guess, they want to avoid at all costs. But when you're looking at something like this, where you have a company that is selling individual services to individual users, and most of those individual users are probably generating chat bots for their website and other kinds of legitimate uses, and a few are scammers, that might be a lot harder to deal with through that kind of good-corporate-citizen approach.

[00:23:37] Bob: I think for the people listening to this topic right now, and in general in the news cycle, you know, we are in the "AI is scary" phase, and deep fakes are very, very scary. So what sort of future are we building here?

[00:23:55] Jonathan Anderson: So I understand that when photographs in newspapers were a new thing, you could find quotes in newspapers from people saying things like, "Well, if they wrote about it in the paper, I'm not going to believe that. But if there's a picture, it really must have happened." And I don't think people have that attitude anymore, because it took a few decades, but people got used to the idea that an image can be faked. And so now when we look at a newspaper and we see an image, we aren't asking, does that image look doctored? We ask, who gave me this image? And if it's a professional photojournalist with a reputation to uphold at a reputable publication, we believe that the image hasn't been doctored, not because of some intrinsic quality, but because of who it came from. And I think that is kind of a long-term, sustainable strategy for this sort of thing: if you can know the source of what you're listening to, if you can know the source or the conduit of who's providing you with a recording, an image, a video, then that's where you need to be making those trust decisions, not based on the quality of the thing itself. I mean, it took a while for people to get used to the idea that airbrushing images, and then later photoshopping images, was a real thing, and it may, unfortunately, take a while for people to get used to this new scam, or I should say this new augmentation of an old scam. And unfortunately, while people are getting used to that idea, there may well be lots of exploitation of that kind of cognitive loophole, but hopefully people will catch up with it soon, because just as we've all kind of learned that you shouldn't trust everything you read on the internet, well, you also, unfortunately, shouldn't believe everything that you hear over your phone.

(MUSIC SEGUE)

[00:25:50] Bob: Jonathan did a great job of explaining the problem, and a scary good job of using a service to imitate my voice. But there are a lot of people out there trying to figure out what we can do about AI and so-called deep fakes like voice cloning. What can government do to rein in their use by criminals? What can industry associations do? What can the law do? What kind of future do we really want to build? Our next guest is deeply immersed in that conversation right now, and as you can see, it's pretty important we have this conversation right now.

[00:26:26] Chloe Autio: My name is Chloe Autio, and I'm an independent AI policy and governance consultant. So I help different types of organizations, public sector, private sector, some non-profits, sort of understand and make sense of the AI policy ecosystem, and then also understand how to build AI technologies a bit better.

[00:26:47] Bob: Okay, so that term that you just used there, the AI policy governance ecosystem, what is that?

[00:26:54] Chloe Autio: I would define it as sort of the network of actors and stakeholders who are developing and deploying AI systems, and also those who are thinking about regulating them and the organizations that are engaging with regulators and these companies. So think, you know, civil society, academics, different types of foundations and organizations like think tanks that are doing interesting work and research on new and emerging topics. The AI policy ecosystem, as I describe it, is, you know, like I said, the network of people thinking about how we can usher in the next wave of this technology responsibly and with guardrails, in a way that also promotes its responsible uptake.

[00:27:38] Bob: Okay, so let's face it, right now every story has AI connected to it, just like every story had .com connected to it 25 years ago or whatnot, right? And most of those stories, well, a lot of those stories, are scary; they're about crime, they're about some sort of dystopian future. How scared should we be about AI?

[00:27:59] Chloe Autio: That's a really good question, Bob. And I think a lot of people are thinking about this. I think every stakeholder in the network that I just described is trying to figure out what all of this hype about AI actually means to them, or should mean to them, right? Should they be very scared? Should we be talking about extinction? Are those fears real? Or should we be thinking about the harms that actually exist today, and maybe there's a middle ground that we should be thinking about? But I think, as with any sort of technology, right, there's a lot of hype and a lot of excitement initially, and then we reach that plateau of, okay, how is this really working in the world? And what are the things that we need to be thinking about in relation to deployment and implementation? And I think we're just sort of getting there with generative AI. So some of it remains to be determined, but I will say that I think some of these fears, particularly around longer-term risks that we haven't even really defined yet, are dominating a little bit of the conversation, and we need to continue to think about the harms that are happening right now.

[00:29:03] Bob: Harms that might be happening right now, such as criminals using voice cloning technology to make their narratives all the more realistic. Okay, if it feels like artificial intelligence has sprung on the scene all at once, well, it kind of has, thanks to that automated chat tool you might have heard about called ChatGPT. It seems like everyone is talking about that lately. I asked Chloe to explain, first off, why humanity should explore artificial intelligence in the first place.

[00:29:33] Chloe Autio: I think, again, with any type of technology, right, there are a lot of really positive benefits. In the context of generative AI, right, it provides access to different types of more accessible knowledge than, say, a Google search might, or pulling an encyclopedia off the shelf like we did when I was writing my first research papers in elementary school. But, you know, I think there are a lot of ways, too, that AI can broaden and expand access. We're thinking about, you know, voice technologies, which is something we're going to talk about today, and people with disabilities, people who are maybe speech impaired. These technologies, particularly multimodal AI, which is, you know, AI models that can extend and work across different modes, so audio, visual, text, that sort of thing, can provide really great opportunities for people to be able to communicate with one another and connect. And some of these types of opportunities and use cases are really, really powerful and impactful. But, you know, I understand the fear, and it's very real, particularly also because a lot of the coverage about this technology is sort of pie in the sky; I'm sure everyone's heard The Terminator reference. But what I would say to those people, and people who are definitely afraid of this, is that AI is also just a tool. It is something that needs to be implemented and thought about at a very use-case- and application-specific level, and that takes a lot of people and work and intervention and thought and organization and processes. And while it may just pop up on the screen, à la ChatGPT or, you know, this interface that feels very magic, the reality is that there are a lot of people and systems and processes happening behind the scenes to make this all happen.
And I would encourage anyone, you know, to just keep that in mind.

[00:31:24] Bob: So this technology seems to be readily available, and having heard my own voice used this way, it's scary. So what, what can be done about this? Clearly there's a risk of harm today using this technology.

[00:31:38] Chloe Autio: Correct. Yeah, and I think that this is one of those areas that's new, new to think about. I mean we've all been talking about, you know, AI governance, technology governance, how to you know proceed responsibly and sort of carefully with the development of emerging technologies. But with generative AI and you know the way that it's just sort of skyrocketed, the application of deepfakes, um, we have a lot more to really consider and you know what I would say is in terms of sort of solutions here, I think the consumer awareness is, is really an important part of this, and I think it's great the FTC came out and said, you know, hey, let's be on the watch for this. But the reality is that, you know, everyday people can't be, can't, I can't possibly expect them, even myself, right, to be totally knowledgeable about all of these scams and all of the potential for scams. Like I said, this landscape has just completely evolved and evolved really quickly. And so to expect that people are just going to know or be able to differentiate, you know, these types of scams from, from the truth, uh, is, is, is really quite a tall order.

[00:32:39] Bob: Especially when they're taking my voice and making it sound exactly like me. I mean how is my mom supposed to discern those things?

[00:32:45] Chloe Autio: Absolutely. You're 100% right. My mom, you know my, my grandfather, me, it, it, it's just really, really tricky. But, but I do think that, you know, sort of raising the awareness, having victims come testify in front of Congress, you know, this type of coverage on you know the evening news is really, really important. But there are, it, it really sort of implicates bigger, bigger questions, right, about trust and technology and, you know, whose responsibility it is when we have these emerging technologies to kind of be in control of, of making sure that, that they don't take over or sort of, you know, get too advanced, or advance too quickly.

[00:33:20] Bob: Okay, so what kind of things can be done to make sure voice cloning and other AI technology doesn't get too advanced too quickly?

[00:33:30] Chloe Autio: Yeah, so I mean watermarking is a technique that can be used again in multimodal situations, uh and one that has emerged as, as sort of one of the leading solutions lately. It involves embedding either a visible or an invisible signal in, in content. And that can be in audio content, it can be on a photo, a, a very obvious visual signal would be like the Getty Images water stamp, or watermark that you see on a lot of photos that are, you know, coming up in Google Search results or whatnot. An imperceptible or invisible, or invisible watermark would be something like a hash or an embedded signal sort of on the data or the metadata level that is not visible to the human eye, but that tells someone who may be developing or you know feeding that type of data into a model, particularly a generative AI model, that that content has or has not been developed by AI or, or watermarked to say, you know, this is an AI-generated piece of content or is in fact a human-generated piece of content. It can go either way.

[00:34:30] Bob: Metaphor alert: Um, it's so hard to explain these things, you know, in short spans of time. Uh, so let me give you what, what I heard as a rough metaphor for some of these technologies. I, I imagine as you were describing ways that, like a hash verification would work, where it would prevent a crime like this, is there might be a, when, when I get a phone call in the future, there might be something in my telephone that can verify, oh this is, this is really your mom calling, this isn't fake.

[00:35:00] Chloe Autio: Yes, I think that's exactly right, it would be like a signal at a, at a bandwidth that would be imperceptible to the human ear, but maybe that, you know, telecommunications companies or cell providers will be able to integrate into the audio sound and verify sort of on the backend. That would be a great example of an imperceptible watermark that would really help build trust and sort of authentication, right, in audio and in phone calls that are going, going across the line.

[00:35:29] Bob: Okay, that's really interesting. And we, we sort of have systems like that in email, although they're, they're quite imperfect, but, you know...

[00:35:35] Chloe Autio: Right.

[00:35:36] Bob: Emails do try to authenticate uh, the senders and whatnot, right?

[00:35:40] Chloe Autio: That's right, yeah. Um, another example too when we think about, you know is the little lock on your uh browser, the URL window, right? I mean that's obviously a perceptible sort of key or certificate, right? I heard that analogy in this context or you know being sort of applied to this context; I think it actually makes a lot of sense, right. There's become this sort of perceptible signal or logo or, I guess it's not a logo, but a, but an icon, right, that is kind of synonymous with trust. And obviously, that won't make sense in every context, it'll be impossible for every consumer to be constantly having to validate, you know, whatever type of content is coming through, but if we can think about that, that, that analogy, kind of like the one that you raised, you know, uh, that may, that may help some of this and help give consumers some more confidence in, in the types of material that they're seeing, or hearing, or listening to.

[00:36:33] Bob: Or hearing, yeah, yeah.

[00:36:34] Chloe Autio: Yes.

[00:36:35] Bob: No, and it certainly is, is reasonable to think that you know I could have a list of 20 people um, all of whom, you know I would, I would, I would jump if they said I'm in trouble around the world. That list isn't too big for all of us, right, and, and those people have some kind of special relationship with me technologically to verify their authenticity, something along those lines.

[00:36:53] Chloe Autio: Absolutely.

[00:36:55] Bob: Another important tool that can help us is having people who work at these companies which make this technology think a lot more about how their tools will be used when they are released to the world.

[00:37:08] Chloe Autio: This involves having people in the loop when these technologies are being developed, you know, people who understand sociotechnical systems and context, the, the scenarios or settings in which these technologies will be deployed, and the data from which or, or the data on which these technologies are being trained, to come in and say, you know, what are the things that we're not thinking about when we're developing these technologies or making them for someone or providing them for someone? We're going to need a lot of human oversight and involvement in the development of generative AI, and we can't rely on just tech alone to fix it for us.

[00:37:42] Bob: Someone should be sitting there saying, what unexpected harm could come from this technology before we release it to the world? And one of those harms, if you have the bright idea to have a website where for $5 you can fake anyone's voice, is that someone sitting in the room could logically say, this will almost certainly be used to steal money from vulnerable people. We should do something about that. So how would that work?

[00:38:05] Chloe Autio: It's really different for every organization, Bob. I think anyone who's spent time like I have developing responsible AI systems or technology governance systems for emerging tech like AI, within an enterprise or company, or even a small organization, asking those questions of sort of, what can go wrong here, are so, is so, so, so important. Documenting risks. There's a lot of well-known risks, be they risks to human rights, to privacy, to copyright infringement, to sort of fraud or impersonation. A lot of these harms and risks that we've sort of been talking about are things that are, that we know about, that, that have been going on since before, you know, technology development and certainly before, you know, AI. And so trying to figure out how and when different technology applications might implicate these harms or might, might create these harms, is really, really important. And having those voices in the room, those teams uh to think about these issues is really, really important. And just to get more granular about that, you know, this could look like developing something like a use, a use case policy or a sensitive use case policy or a prohibited use case policy, right. If you can buy my service to impersonate your voice for a few bucks online, if I'm that business owner, right, I probably want to have some, some requirements or guidelines about what, what buyers of my technology should or should not be thinking about so that I'm not getting in trouble later for the ways that they are using it for harm, or to, you know, distort people, all of these things. So really, really having those, those hard discussions and considerations kind of in place before these technologies are out on the market is really important and obviously, as we well, as you well know, that doesn't always happen.
But really thinking about the uses you know, manipulation, fraud, deception, and which ones are really acceptable for new products is a really, really good step to take.

[00:39:58] Bob: There is no obvious technological bullet-proof solution, however.

[00:40:04] Chloe Autio: You know some of the, some of the solutions that have been proposed to help with some of this audio scamming are very human in essence. So hang up the phone and call the number back and you know, is, is it the person who, who they were saying they were? Maybe it's not. To your point, Bob, try to contact the person who's being impersonated in another way or someone that you know is, is always very close to them. Again, these are super human, these are super, as in very, human fixes. Not superhuman fixes. But it, it, they may be the best ways to outsmart these technologies which I think all of us, I mean some of us will come to find, are not, in fact, magic nor intelligent.

[00:40:45] Bob: I, I don’t know about you, but I fully expect my technology to outsmart me if it hasn't already.

[00:40:50] Chloe Autio: (laugh) Maybe in some ways, but I, I think that the uh, like I said, the very human interventions to verifying, you know, the AI scams, I, I think is a great place to start if anything like this happens, and in fact, you know, was sort of the solution for a couple of the, or maybe not solution, but provided some peace and resolved the situation for a couple of the scams that I was reading about.

[00:41:12] Bob: Sure, sure, and I'm going to call that, however, the last line of defense, and I want something better.

[00:41:17] Chloe Autio: Yeah, I agree, Bob. I firmly agree. I firmly agree.

[00:41:21] Bob: This is an issue that, this sort of liability concern for people who sell products, hits every industry. Um, but so I want you to react to my emotional impulse to all of this, which is, if your website is being used primarily by criminals to steal money from people, I want you to be arrested.

[00:41:40] Chloe Autio: I think that that's a totally fair emotion, Bob, and I actually share it, right. There's a whole ecosystem of, of these organizations and companies that are obviously profiting off of creating these technologies with, with no real, you know consideration or, or a thought about the harms.

[00:41:58] Bob: Or consequence.

[00:41:59] Chloe Autio: Or consequence.

[00:42:00] Bob: I don't see them getting arrested, yeah.

[00:42:01] Chloe Autio: They're not. They're not, and I think sadly, you know, we could have a, you know, many-hours-long podcast about this, but you know the regulators like the FTC really need more resources to be able to investigate and, and, and pursue and enforce these types of crimes. And, you know, we could talk about that in terms of privacy violations, we could talk about it in terms of children's privacy and social media. I mean all of these things, but you're right, there are these sort of nascent markets of, of really harmful and sort of insidious technologies that crop up. And it's really, really important that, you know, the people that are in charge of being able to investigate and enforce these harms are able to do so. And I think what we're seeing right now is that unfortunately they, they just don't have the resources and time. And that's, you know, that's it, it only hurts the consumer, right, but it also hurts, right, it primarily hurts the consumer, but you know also just sort of the general ecosystem of, of technology and trust in technology, right?

[00:43:00] Bob: Oh the, the more that crime gets associated with um, AI, the, the less we'll end up with the benefits of AI; of course, people will be afraid of it.

[00:43:10] Chloe Autio: Yeah, I think that, yeah, I think you're right, Bob. I think that can be said for anything, right? And such a good ob--, such a good and just a blunt observation. It's so true.

[00:43:20] Bob: Okay, so you are here to save me from my bluntness, because I can hear a chorus of people listening to what I just said and saying, great, the Bob Sullivan Police Force is going to arrest everyone in the tech field. Um, it, and in fact, I think it's probably debatable that what I have described is even illegal. If you offer a service online that lets you, for amusement, create deepfake audio of people, do we, we don't want that to be illegal, right? So, so talk me off my, my ledge of wanting to arrest everyone involved in this.

[00:43:48] Chloe Autio: I love, Bob, your Bob Sullivan Police Force. That's a great one, and I certainly would take it seriously. But I think you raise an important point, that in this situation right now, it's not really clear sort of where, where the law starts and stops, or where policy and law really applies. We haven't really seen a lot of robust guidance about, you know, how to regulate these technologies. But I do want to talk about some areas of the law that sort of do apply, right? Fraud and impersonation, we've talked about that a lot. That's definitely illegal. Copyright infringement is another one. For example, for these companies or organizations that are selling AI voice generation models or generative AI voice models, to train and to produce the output which is, you know, the fake voice that these companies are providing as a service, they need to train models on a lot of voice content, right? So some of that content can be in the public domain. There are not entirely clear rules about where and when that content can be scraped for model development. Sometimes, as we're seeing with some of these open lawsuits against OpenAI and other AI--, generative AI companies, that content can be copyrighted. And so you know some of these voice generation companies may be infringing copyright. The third area that I think we could talk about as well is obviously privacy violations. You know, whether or not the data that was used to train these models was in the public domain or private, maybe they were using both types of sources, but again, if there were, you know, data sources that people sort of expected to be private or otherwise belong to them or you know were recordings that they expected not to be shared, we may be talking about privacy violations. And obviously, we don't have a comprehensive privacy law in the US, but I think what's really needed is to kind of develop new guidance and regulation around how certain areas of the law apply in these contexts.
And I know that regulators and members of Congress, and you know, others are working really carefully on this, and really, really hard on this, but, but we certainly have, have a ways to go. And, and I understand though the interest and the drive to, to want to pursue these folks with the police force, because in absence of, of this clear regulation, rules, and really solid enforcement, it's going to be really hard to kind of keep the bad actors from doing what they do.

[00:46:09] Bob: And I think this is why what you do is so important, and I, and I encourage people to find out more about it. Um, sometimes policy work seems wonky or not interesting, but of course, it's the foundation uh we need to prevent all these bad things. Um, but let me try to put a fine point on this part. Um, there's stuff in the world that happens that everyone can sort of look at and say, well that's wrong. But we may not necessarily have a rule or law that makes it criminal. What we usually do in that situation is try and like try and drag an old law and sort of like, square peg/round hole, shove it in, right, uh, um, which is some of what's happening in the copyright situation. Um, and, and some--, sometimes that's perfectly adequate, and there's a whole set of people who prefer that. Um, the other idea is a new law that regulates things like deepfakes. Um, do, do you have ideas about what you prefer and, and if, if so, what kind of new law or regulation do you think might, might be helpful?

[00:47:06] Chloe Autio: Yeah, this is the million-dollar question, Bob, truly. Maybe it's a billion-dollar one by now, I'm not really sure. But I think that, you know, that the sort of square peg/round hole analogy is a good one because I think that there's a lot of focus on trying to say, well what can we use and, and how can we use it? How can we adapt it? But I actually think that that approach is really, really important, right, because AI, again, like any other technology, is, the harms and risks of the technology are, are very specific to the context in which the technology is being deployed or used, right. So for example, AI used to vet resumes. The harms there are very different than you know AI used to monitor sensors in a jet engine, for example. And I don't know if I would want, you know, a regulator for AI to be making rules in both contexts, knowing that we have you know wonderful lawyers who are trained in understanding discrimination and employment and equal opportunity; I would trust them to make these rules and think about these rules in the context of employment access, right, and inclusion, and anti-discrimination. I have sort of this issue with this thought around creating, you know, one sort of AI regulator to rule them all, because while technical expertise is really, really important to understanding these technologies, they are deployed out in the world where people and laws and norms and values already exist. And so, you know, leveraging those laws, be they related to labor or employment or, like I said, housing discrimination, preventing housing discrimination, or highway safety, right, I want the expertise from those domains really to be leading, hand in hand and/or in partnership with, with technical experts.
But I don't think that this, you know, notion of having sort of one blanket AI law or one blanket AI regulator is going to be the solution, because where the harms and risks that we're trying to mitigate are happening in the world, we have people who deeply understand those, those risks and harms, and they're not always technical, right.

[00:49:16] Bob: Of course, in fact, almost certainly they're from the other side of the world, right?

[00:49:19] Chloe Autio: Correct, correct, correct.

[00:49:21] Bob: Yeah, yeah, yeah. You do a good job of laying out how complex this is.

[00:49:24] Chloe Autio: It's, it's very, it's very complex indeed, but uh, maybe we'll figure it out someday. I'm hopeful. (laughs)

[00:49:32] Bob: Okay, so what do you want to leave people with? They've just listened to this conversation. We've scared them about fake voices that might help criminals steal their money. We've hinted a little bit that AI can do a bunch of good things too. You're at the center of all of these people trying to make these sort of structural decisions. Wh--, what do you want to leave people with?

[00:49:51] Chloe Autio: Hmm... I want to tell people that, that they should always trust their gut. I think that there is this sort of magic quality to these technologies and particularly so in the way that powerful people in our world are talking about them, right? Heads of state, leaders of these companies, you know, billionaires from Silicon Valley, and those people are all very detached from how these technologies are being used by everyday people, by, you know, the listeners to your podcast, even by me, you know, me, anyone, right? And so I just, I just want people to remember to trust their gut. If something feels wrong with the phone call, right, hang up. Don't feel like you have to have all the answers or know everything about how these technologies work because I think I see a lot of that too. You know, just being uncomfortable with the allure and the might and sort of the power these technologies are, are being promised to have. And, and in, and in reality, you know, they are systems that require human development, intervention, and thought, and oversight, and that's what we need to keep sort of reminding ourselves. But most importantly to sort of trust your gut when you're in a sticky situation with technology or you know curious about it or scared of it, like uh that gut feeling is probably right, investigate it or leave it alone. But, but don't sort of lose your, your human aspect or human element when you're, when you're thinking about or working with these technologies. That's what I would say.

[00:51:21] Bob: Don't lose the human element. I'd like to add a big amen to that.

(MUSIC SEGUE)

[00:51:38] Bob: If you have been targeted by a scam or fraud, you are not alone. Call the AARP Fraud Watch Network Helpline at 877-908-3360. Their trained fraud specialists can provide you with free support and guidance on what to do next. Our email address at The Perfect Scam is: theperfectscampodcast@aarp.org, and we want to hear from you. If you've been the victim of a scam or you know someone who has, and you'd like us to tell their story, write to us or just send us some feedback. That address again is: theperfectscampodcast@aarp.org. Thank you to our team of scambusters: Associate Producer, Annalea Embree; Researcher, Sarah Binney; Executive Producer, Julie Getz; and our Audio Engineer and Sound Designer, Julio Gonzalez. Be sure to find us on Apple Podcasts, Spotify, or wherever you listen to podcasts. For AARP's The Perfect Scam, I'm Bob Sullivan.

(MUSIC OUTRO)

END OF TRANSCRIPT

This week, we are bringing you a very special episode about a new development in the tech world that threatens to make things much easier for criminals – artificial intelligence. AI is already being used to supercharge the common grandparent and impostor scams because it’s relatively cheap and easy to clone a person’s voice and make it say whatever you want. We use examples with Bob’s own voice to show you how it’s being done and what to look out for.